AI Denoising for X-ray and Nuclear Tomography*

Over the past decade, artificial intelligence (AI), especially deep learning (DL), has had transformative impacts on many fields. Medical imaging is no exception: research on deep tomographic reconstruction started in 2016[1]. This article aims to shed light on deep learning-based image denoising techniques, using X-ray computed tomography (CT) and positron emission tomography (PET) as primary examples (Figure 1). For medical professionals, patients, and their family members and friends, it is crucial to understand the nuances of image noise reduction. This article is intended for well-educated readers who are interested in how image noise can be suppressed to improve diagnostic performance. The focus will be on the ideas behind modern AI denoising technologies, without delving into the technical intricacies[2].

Image denoising is a crucial step in medical imaging, aimed at enhancing the quality of images obtained with various imaging modalities. In the context of CT and PET (and, similarly, single photon emission CT, or SPECT), denoising means suppressing or eliminating troublesome image noise to improve the accuracy and reliability of the diagnostic information these images provide.

It is well known that CT scans are widely used to produce cross-sectional, volumetric, and even dynamic images of human anatomy. Currently, about 375 million CT scans are performed worldwide per year, with an annual growth rate of 3-4%[4]. One of the primary challenges in X-ray imaging is the use of ionizing radiation, which can cause genetic damage and induce cancer with a small but non-negligible probability. Thus, it is highly desirable to reduce the radiation dose as much as feasible, ideally to an insignificant level comparable to or below the natural background radiation. This requirement is expressed as the ALARA principle (“As Low As Reasonably Achievable”)[5] (Figure 2). Low-dose CT has therefore been a major theme in medical imaging research. Reducing the radiation dose of a CT scan increases the inherent noise in the X-ray projection data and in the resulting images, and stronger noise can obscure details and degrade diagnostic performance.
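The link between dose and noise can be made concrete with a simple photon-counting model. The sketch below assumes a monoenergetic beam, Poisson-distributed detector counts, and no electronic noise or scatter (all simplifications); the attenuation value and photon numbers are hypothetical. Under these assumptions, cutting the incident photon count (a proxy for dose) to one quarter should roughly double the standard deviation of the log-transformed projection value, because quantum noise scales with one over the square root of the detected counts.

```python
import numpy as np

def noisy_line_integrals(line_integral, incident_photons, n_samples, rng):
    """Simulate repeated measurements of one CT ray under a Poisson photon-count model."""
    expected_counts = incident_photons * np.exp(-line_integral)  # Beer-Lambert attenuation
    counts = rng.poisson(expected_counts, size=n_samples)         # quantum (photon) noise
    counts = np.maximum(counts, 1)                                # guard against log(0)
    return -np.log(counts / incident_photons)                     # log-transformed projection

rng = np.random.default_rng(0)
line_integral = 2.0  # hypothetical attenuation along the ray
noise_std = {}
for label, i0 in [("normal dose", 1e5), ("quarter dose", 2.5e4)]:
    p = noisy_line_integrals(line_integral, i0, 100_000, rng)
    noise_std[label] = p.std()
    print(f"{label}: mean = {p.mean():.4f}, std = {p.std():.4f}")

print("noise ratio (quarter / normal):", noise_std["quarter dose"] / noise_std["normal dose"])
```

The mean of the log-transformed value stays close to the true line integral at both dose levels; only its spread changes, which is exactly the noise that a denoiser must remove.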

Complementary to CT, nuclear tomography such as PET and SPECT provides functional images of organs and tissues. These modalities are instrumental in diagnosing and monitoring various conditions, especially in oncology, cardiology, and neurology. Like CT, PET and SPECT use ionizing radiation, but the radiation comes from radiopharmaceuticals administered to the patient rather than from an X-ray tube irradiating the patient externally. For the same reason as in CT, the radiation dose in PET and SPECT should also be minimized. Consequently, low-dose nuclear imaging suffers from image noise and calls for denoising research as well.
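Low-dose nuclear data are often emulated from full-dose data by randomly discarding detected events. The sketch below assumes Poisson-distributed counts in each sinogram bin with a hypothetical mean of 400, and keeps each event with probability 0.1 (binomial thinning), which yields Poisson counts with one tenth of the mean. Because the relative noise of Poisson counts is one over the square root of the mean, the relative noise should grow by about the square root of 10, roughly 3.2 times.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical full-dose sinogram: every bin has the same expected count, so any
# spread across bins is pure Poisson counting noise.
full_counts = rng.poisson(lam=400.0, size=(128, 128))

# Emulate a 10% dose acquisition by keeping each detected event with probability 0.1.
dose_fraction = 0.1
low_counts = rng.binomial(full_counts, dose_fraction)

def relative_noise(counts):
    """Coefficient of variation: standard deviation divided by mean."""
    return counts.std() / counts.mean()

print(f"full dose: mean = {full_counts.mean():.1f}, relative noise = {relative_noise(full_counts):.3f}")
print(f"10% dose:  mean = {low_counts.mean():.1f}, relative noise = {relative_noise(low_counts):.3f}")
```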

Image denoising is extremely important for CT, PET, and SPECT. Filtered backprojection was the image reconstruction method of choice for several decades, and it produces excellent image quality when signal-to-noise ratios are sufficiently high and projection data are complete. However, filtered backprojection yields very noisy images from a low-dose CT scan or a PET/SPECT scan, because the filtered backprojection formula is derived under the assumption of noise-free projection data. To address this challenge, model-based iterative image reconstruction algorithms were developed[6]. These iterative algorithms improve image quality significantly but unfortunately also introduce secondary image artifacts, require long computation times, and are not clinically satisfactory in many challenging cases.
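Why filtered backprojection struggles with noisy data can be seen in its filtering step. The sketch below applies an ideal ramp filter, whose gain grows linearly with spatial frequency, to a single simulated projection of a uniform disk plus white Gaussian noise. The phantom, the noise level, and the absence of any apodization window are illustrative assumptions. The smooth object is dominated by low frequencies while the noise spreads across all frequencies, so the ramp filter attenuates the signal far more than the noise and the signal-to-noise ratio drops sharply. Backprojection over many views averages out part of this noise, and clinical implementations use smoother filter windows, so this only shows the trend.

```python
import numpy as np

def ramp_filter(projection):
    """Filter one projection with an ideal ramp |f| in the Fourier domain (no window)."""
    freqs = np.fft.fftfreq(projection.shape[-1])   # cycles per sample, in [-0.5, 0.5)
    return np.real(np.fft.ifft(np.fft.fft(projection) * np.abs(freqs)))

def snr(signal, noise):
    """Ratio of the signal norm to the noise norm (an amplitude ratio)."""
    return np.linalg.norm(signal) / np.linalg.norm(noise)

n = 512
x = np.linspace(-1.0, 1.0, n)
# Parallel-beam projection of a uniform disk of radius 0.5 (a semicircular profile).
clean = 2.0 * np.sqrt(np.maximum(0.25 - x**2, 0.0))
rng = np.random.default_rng(2)
noise = rng.normal(0.0, 0.01, n)                   # hypothetical low-dose noise level

filtered_clean = ramp_filter(clean)
filtered_noise = ramp_filter(clean + noise) - filtered_clean  # filtering is linear

print(f"SNR before ramp filtering: {snr(clean, noise):.1f}")
print(f"SNR after ramp filtering:  {snr(filtered_clean, filtered_noise):.2f}")
```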

Fortunately, DL methods came to the rescue. DL, a sub-area of AI and machine learning (ML), has become mainstream in medical imaging[7]. By leveraging large datasets, deep artificial neural networks, and high-performance computing resources, we can train networks to identify and reduce noise in medical images.

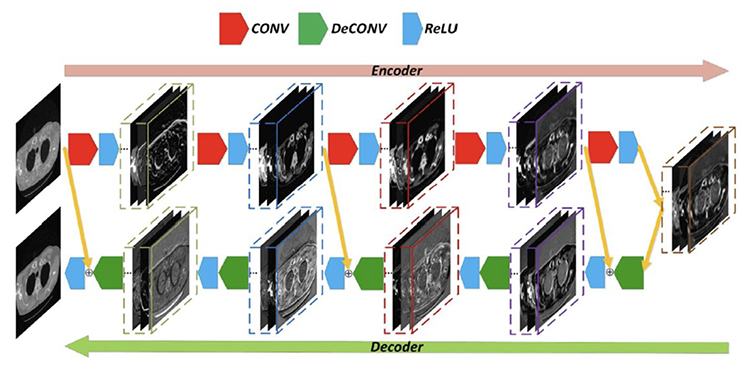

At its core, deep learning involves training a deep artificial neural network consisting of many layers of artificial neurons. Each neuron takes multiple scalar inputs, weights each of them, and computes their weighted sum. After this linear operation, the weighted sum is passed through a nonlinear function to produce a scalar output, which reflects how strongly the neuron is activated (a high value) or deactivated (a low value). Training a deep neural network uses big data and extensive computation to optimize all the weights of the network by minimizing an objective function; for example, the objective may require a denoised low-dose CT (LDCT) image to resemble the ground-truth normal-dose CT (NDCT) image as closely as possible. One of the earliest deep networks for low-dose CT image denoising is RED-CNN (“Residual Encoder-Decoder Convolutional Neural Network”) (Figure 3)[8], which produces impressive results (Figure 4)[8].
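The neuron and the training objective described above can be sketched in a few lines. This is a toy illustration, not RED-CNN: the `neuron` function uses a ReLU nonlinearity (one common choice), and the training loop fits a single linear neuron, acting as a hypothetical 5-tap denoising filter on a synthetic 1D signal, by gradient descent on the mean squared error between its output and the clean target. The noisy and clean signals stand in for LDCT and NDCT data, and the learning rate and step count are illustrative assumptions.

```python
import numpy as np

def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of scalar inputs followed by a ReLU nonlinearity."""
    z = float(np.dot(weights, inputs) + bias)
    return max(z, 0.0)

# Synthetic 1D data standing in for paired low-dose / normal-dose images.
rng = np.random.default_rng(3)
n, k = 2000, 5
t = np.linspace(0.0, 8.0 * np.pi, n)
clean = np.sin(t) + 0.5 * np.sign(np.sin(0.25 * t))     # "normal-dose" target
noisy = clean + rng.normal(0.0, 0.3, n)                  # "low-dose" input

# Each row is a sliding window of k noisy samples: the inputs of one linear neuron.
windows = np.lib.stride_tricks.sliding_window_view(noisy, k)
targets = clean[k // 2 : n - k // 2]                     # clean value at each window center

def mse(w, b):
    """Objective: mean squared error between the neuron's output and the clean target."""
    return float(np.mean((windows @ w + b - targets) ** 2))

weights, bias, lr = np.zeros(k), 0.0, 0.05
initial_loss = mse(weights, bias)
for _ in range(500):
    residual = windows @ weights + bias - targets
    weights -= lr * 2.0 * windows.T @ residual / len(targets)  # gradient of the MSE w.r.t. weights
    bias -= lr * 2.0 * residual.mean()                         # gradient w.r.t. bias
final_loss = mse(weights, bias)

print("neuron([1, 2], [0.5, 1.0], 0.0) =", neuron([1, 2], [0.5, 1.0], 0.0))
print(f"loss before training: {initial_loss:.4f}, after: {final_loss:.4f}")
print("learned filter taps:", np.round(weights, 3))
```

A real denoising network stacks many such neurons into convolutional layers and trains them with backpropagation, but the principle is the same: adjust the weights until the objective stops decreasing.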

In LDCT denoising research, RED-CNN has been widely cited as a benchmark for performance evaluation or as a baseline for further improvement (1,400 citations since 2017). For example, a team from Capital Normal University (Beijing, China) combined RED-CNN with a classic algorithm and won first place in the 2022 AAPM Spectral CT Challenge[9] (“Team GM_CNU developed the winning algorithm. In each module, both algebraic reconstruction and CNN processing steps are included. The particular CNN used is based on RED-CNN”[9]).

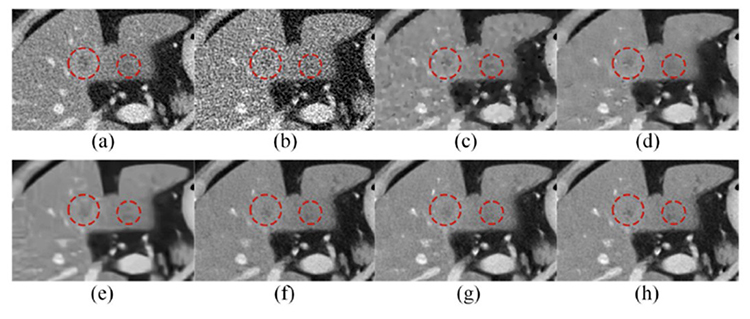

As shown in Figure 4, despite the excellent denoising performance of RED-CNN, the resultant image appears somewhat over-smoothed (Figure 4, (a) versus (h)). An interesting advance was to introduce a perceptual loss so that the denoising result preserves textural features of interest. For that purpose, the first deep denoising network of this kind, WGAN with a perceptual loss (where “W” refers to the Wasserstein distance), was designed by extending the well-known generative adversarial network (GAN)[10] (Figure 5). In addition to the established image generation mechanism of GAN, a perceptual measure of textural image quality is computed in the WGAN network to guide the denoising process, producing visually impressive results that clearly outperform model-based iterative reconstruction (Figure 5, (c) versus (d))[10] (over 1,300 citations since 2018).
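The two ingredients mentioned above, an adversarial (Wasserstein) term and a perceptual term, can be sketched as loss functions. In the original work the perceptual loss compares feature maps of a pretrained VGG network; here a hypothetical stand-in feature extractor based on finite differences (edge and texture responses) keeps the example self-contained, and the critic scores are plain arrays rather than outputs of a trained critic network. The weighting `lam` is an illustrative assumption. The toy comparison shows the intended behavior: blurring a textured patch changes its edge features, whereas a uniform brightness offset changes the pixel-wise error but not these features.

```python
import numpy as np

def features(img):
    """Hypothetical stand-in for a pretrained feature extractor (e.g., VGG):
    horizontal and vertical finite differences, which respond to edges and texture."""
    return [np.diff(img, axis=1), np.diff(img, axis=0)]

def perceptual_loss(denoised, target):
    """Mean squared distance between feature maps instead of between raw pixels."""
    return sum(float(np.mean((a - b) ** 2)) for a, b in zip(features(denoised), features(target)))

def pixel_mse(denoised, target):
    return float(np.mean((denoised - target) ** 2))

def critic_loss(critic_real, critic_fake):
    """WGAN critic objective (minimized): E[D(fake)] - E[D(real)], the negative of the
    estimated Wasserstein distance. Gradient penalty or weight clipping is omitted here."""
    return float(np.mean(critic_fake) - np.mean(critic_real))

def generator_loss(critic_fake, denoised, target, lam=0.1):
    """Adversarial term (raise the critic's score of denoised images) plus weighted perceptual term."""
    return float(-np.mean(critic_fake)) + lam * perceptual_loss(denoised, target)

def box_blur(img):
    """3x3 mean filter with edge padding, used to mimic an over-smoothed result."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0

rng = np.random.default_rng(5)
target = rng.normal(0.0, 1.0, (64, 64))   # hypothetical textured "normal-dose" patch
blurred = box_blur(target)                 # over-smoothed estimate
offset = target + 0.5                      # same texture, shifted brightness

print(f"blurred: pixel MSE = {pixel_mse(blurred, target):.3f}, perceptual = {perceptual_loss(blurred, target):.3f}")
print(f"offset:  pixel MSE = {pixel_mse(offset, target):.3f}, perceptual = {perceptual_loss(offset, target):.3g}")
print("generator loss (blurred):", generator_loss(np.array([0.2, -0.1]), blurred, target))
```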

Encouraged by these promising results in deep learning-based low-dose CT denoising, a double-blind study was performed to systematically compare deep learning with commercial iterative reconstruction. Specifically, a modularized neural network for LDCT denoising was designed at Rensselaer Polytechnic Institute and compared with commercial iterative reconstruction methods from three leading CT vendors. This dedicated network performs an end-to-end process mapping in which intermediate denoised images are produced all the way to a final denoised image, allowing radiologists in the loop to optimize the denoising depth in a task-specific fashion (Figure 6). The network was trained on the Mayo LDCT Dataset and tested on separate chest and abdominal CT exams from Massachusetts General Hospital. The results show that in most cases deep denoising is either superior or comparable to the commercial iterative reconstruction results[11].
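The idea of exposing intermediate results can be sketched as a chain of modules whose outputs are all kept, so that a reader can pick the depth that suits the task. In the actual network each module is a trained deep network; here each module is a hypothetical stand-in (a mild, fixed smoothing step), and the chosen depth is simply an index into the list of intermediate images.

```python
import numpy as np

def box_blur(img):
    """3x3 mean filter with edge padding."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0

def stand_in_module(img):
    """Hypothetical placeholder for one trained denoising module: blend the input with a smoothed copy."""
    return 0.5 * img + 0.5 * box_blur(img)

def progressive_denoise(image, modules):
    """Apply the modules in sequence and keep every intermediate result (index 0 is the input)."""
    outputs = [image]
    for module in modules:
        outputs.append(module(outputs[-1]))
    return outputs

rng = np.random.default_rng(6)
noisy = 1.0 + rng.normal(0.0, 0.1, (128, 128))   # flat region with hypothetical noise
outputs = progressive_denoise(noisy, [stand_in_module] * 5)

for depth, img in enumerate(outputs):
    print(f"depth {depth}: noise std = {img.std():.4f}")

chosen_depth = 3                                  # e.g., picked by a radiologist for a given task
selected = outputs[chosen_depth]
```

Keeping every intermediate output costs little memory compared with retraining, and it lets the trade-off between noise suppression and detail preservation be decided at reading time rather than fixed during training.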

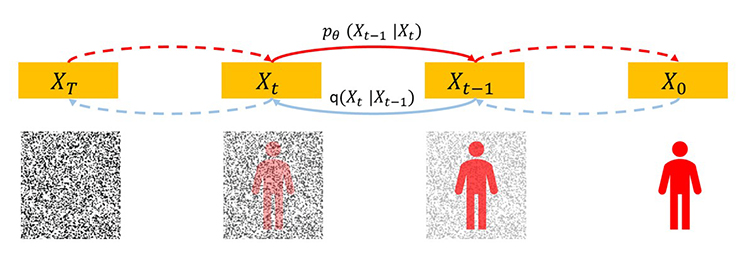

Given the importance of low-dose imaging, sustained research efforts are being made to achieve even better denoising performance. Recently, diffusion models have attracted major attention in the medical imaging field, delivering state-of-the-art results in many imaging tasks, including image reconstruction and denoising[12].

Diffusion models, such as the DDPM (Denoising Diffusion Probabilistic Model), are deep generative models that can be used to create images[12]. Take a clear picture from a large training dataset and imagine adding random noise to it step by step until it becomes a Gaussian noise field. This process can then be followed in the reverse direction: given a pure noise picture sampled from a high-dimensional Gaussian distribution, imagine removing a small amount of noise step by step until it becomes a meaningful picture. In this seemingly magical reverse process, the key is a score function that tells us how to remove noise meaningfully at each step (Figure 7). The score function is represented by a deep network that can be trained on the training dataset. The trained network can then synthesize realistic pictures that follow the same distribution as the training dataset. Thus, a diffusion model captures comprehensive knowledge of the training data distribution and has great potential to guide many imaging tasks, including image denoising.
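The forward and reverse processes can be sketched numerically. The example below follows the DDPM recipe with a linear noise schedule (from 1e-4 to 0.02 over 1,000 steps, as in the original DDPM paper) but replaces the trained network with an exact score for a toy one-dimensional Gaussian "dataset" (mean 2.0, standard deviation 0.5, both hypothetical), for which the score of every noisy marginal is known in closed form. DDPM networks are usually trained to predict the added noise; that prediction equals the score multiplied by minus the square root of one minus the cumulative signal factor, which the code uses to convert between the two. Starting from pure Gaussian noise, the reverse loop should return samples whose mean and standard deviation are close to those of the toy data.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)        # per-step noise variances (linear schedule)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)           # cumulative signal retention up to each step

def forward_noise(x0, t, rng):
    """Sample x_t ~ q(x_t | x_0) in closed form: shrink the clean data and add Gaussian noise."""
    eps = rng.standard_normal(np.shape(x0))
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps

# Toy "training data": x0 ~ N(MU, S^2). Its noisy marginals are Gaussian too, so the
# exact score can stand in for a trained network.
MU, S = 2.0, 0.5

def exact_score(x_t, t):
    """Gradient of log p_t(x_t) for the toy Gaussian data."""
    mean = np.sqrt(alpha_bars[t]) * MU
    var = alpha_bars[t] * S**2 + (1.0 - alpha_bars[t])
    return -(x_t - mean) / var

def reverse_sample(n, rng):
    """DDPM ancestral sampling, starting from pure Gaussian noise."""
    x = rng.standard_normal(n)
    for t in range(T - 1, -1, -1):
        eps_hat = -np.sqrt(1.0 - alpha_bars[t]) * exact_score(x, t)  # score -> noise prediction
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        z = rng.standard_normal(n) if t > 0 else np.zeros(n)            # no noise on the last step
        x = mean + np.sqrt(betas[t]) * z
    return x

rng = np.random.default_rng(4)
x_T = forward_noise(np.full(50_000, MU), T - 1, rng)
samples = reverse_sample(20_000, rng)
print(f"forward, last step: mean = {x_T.mean():.3f}, std = {x_T.std():.3f}")          # near N(0, 1)
print(f"reverse samples:    mean = {samples.mean():.3f}, std = {samples.std():.3f}")  # near MU, S
```

With real images, `exact_score` would be replaced by a trained network that takes the noisy image and the step index as inputs; the sampling loop itself stays the same.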

Recently, we obtained exciting image denoising results using the diffusion model approach in collaboration with researchers at General Electric (Figure 8, top row)[13] (paper D1-1-2). In the unsupervised mode, we trained the diffusion model on realistically simulated high-quality CT images so that it can produce a high-quality denoised image when conditioned on a noisy and blurry CT image (Figure 8(a)). Notably, the supervised denoising result (Figure 8(b)) is no better than its unsupervised counterpart (Figure 8(c)) when compared with the ground truth (Figure 8(d)). In parallel, Prof. Chi Liu’s group at Yale also adapted the diffusion model for low-dose PET denoising (Figure 8, bottom row)[14]. Specifically, a low-count PET input (Figure 8(e)) was processed with a typical convolutional neural network (CNN) (Figure 8(f)) and with the adapted diffusion model (Figure 8(g)). Compared with the ground truth (Figure 8(h)), the diffusion model clearly outperformed the CNN.

Despite its evident benefits, deep learning also faces challenges in medical image denoising. These include the need for large and diverse training datasets, concerns about data privacy, and regulatory requirements for integrating these technologies into existing medical workflows. Efforts are underway to develop guidelines and policies that ensure the safe, fair, and rapid adoption of AI in healthcare. The continued advancement of deep learning technologies promises an even brighter future for medical imaging and a much-improved quality of life for all of us on Earth[15].

*This article is partially based on Ge Wang’s IEEE NPSS Hoffman Imaging Scientist Award presentation, Nov. 8, 2023 (https://www.youtube.com/watch?v=iaP5uIBYmGE&t=76s or https://www.bilibili.com/video/BV1ig4y1Z7aJ/?spm_id_from=333.999.0.0&vd_source=e1958ef1bfc229837f46ee321f509b10). Dr. Hongming Shan proofread the article and made several refinements.

References

[1]G. Wang, “A Perspective on Deep Imaging,” IEEE Access, vol. 4, pp. 8914–8924, 2016, doi: 10.1109/ACCESS.2016.2624938.

[2]Y. Lei, C. Niu, J. Zhang, G. Wang, and H. Shan, “CT Image Denoising and Deblurring With Deep Learning: Current Status and Perspectives,” IEEE Trans. Radiat. Plasma Med. Sci., pp. 1–1, 2023, doi: 10.1109/TRPMS.2023.3341903.

[3]K. Vishal, “ChatGPT-4 Turbo, All you need to know.,” Medium. Accessed: Dec. 21, 2023. [Online]. Available: https://medium.com/@kumar.vishal9626/chatgpt-4-turbo-all-you-need-to-know-e141e644bcf4

[4]“Global CT Equipment Procedure Volumes and Reimbursement Growth,” Imaging Technology News. Accessed: Dec. 23, 2023. [Online]. Available: http://www.itnonline.com/content/global-ct-equipment-procedure-volumes-and-reimbursement-growth

[5]CDC, “ALARA – As Low As Reasonably Achievable,” Centers for Disease Control and Prevention. Accessed: Dec. 21, 2023. [Online]. Available: https://www.cdc.gov/nceh/radiation/alara.html

[6]L. R. Koetzier et al., “Deep Learning Image Reconstruction for CT: Technical Principles and Clinical Prospects,” Radiology, vol. 306, no. 3, p. e221257, Mar. 2023, doi: 10.1148/radiol.221257.

[7]G. Wang, J. C. Ye, and B. De Man, “Deep learning for tomographic image reconstruction,” Nat. Mach. Intell., vol. 2, no. 12, Art. no. 12, Dec. 2020, doi: 10.1038/s42256-020-00273-z.

[8]H. Chen et al., “Low-Dose CT With a Residual Encoder-Decoder Convolutional Neural Network,” IEEE Trans. Med. Imaging, vol. 36, no. 12, pp. 2524–2535, Dec. 2017, doi: 10.1109/TMI.2017.2715284.

[9]E. Y. Sidky and X. Pan, “Report on the AAPM deep-learning spectral CT Grand Challenge,” Med. Phys., doi: 10.1002/mp.16363.

[10]Q. Yang et al., “Low-Dose CT Image Denoising Using a Generative Adversarial Network With Wasserstein Distance and Perceptual Loss,” IEEE Trans. Med. Imaging, vol. 37, no. 6, pp. 1348–1357, Jun. 2018, doi: 10.1109/TMI.2018.2827462.

[11]H. Shan et al., “Competitive performance of a modularized deep neural network compared to commercial algorithms for low-dose CT image reconstruction,” Nat. Mach. Intell., vol. 1, no. 6, Art. no. 6, Jun. 2019, doi: 10.1038/s42256-019-0057-9.

[12]A. Kazerouni et al., “Diffusion models in medical imaging: A comprehensive survey,” Med. Image Anal., vol. 88, p. 102846, Aug. 2023, doi: 10.1016/j.media.2023.102846.

[13]C. Huang et al., “Proceedings of the 17th International Meeting on Fully 3D Image Reconstruction in Radiology and Nuclear Medicine.” arXiv, Nov. 03, 2023. doi: 10.48550/arXiv.2310.16846.

[14]H. Xie et al., “DDPET-3D: Dose-aware Diffusion Model for 3D Ultra Low-dose PET Imaging.” arXiv, Nov. 28, 2023. doi: 10.48550/arXiv.2311.04248.

[15]G. Wang et al., “Development of metaverse for intelligent healthcare,” Nat. Mach. Intell., vol. 4, no. 11, Art. no. 11, Nov. 2022, doi: 10.1038/s42256-022-00549-6.